Howdy! I am a 5th-year Ph.D. student in Computer Engineering at Texas A&M University. My research began with image and video restoration, where I explored efficient recovery using sequence models such as Transformers and Mamba.

Recently, my focus has shifted to 3D/4D scene reconstruction, especially single-forward methods such as VGGT. One of my recent works, Stylos, has been accepted to ICLR 2026 with pre-rebuttal scores in the top 1.3%.

I am actively seeking research internship opportunities for Summer 2026 and Fall 2026. If you are aware of relevant openings or potential referrals, please feel free to contact me at heyhanzhou@gmail.com.

🔥 News

- 2026.01: 🎉🎉 Our paper Stylos has been accepted to ICLR 2026.

- 2025.08: 🎉🎉 I have joined the Urban Resilience Lab as a research assistant, in cooperation with Resilitix AI.

- 2025.04: 🎉🎉 XYScanNet has been accepted to NTIRE at CVPR 2025. See you in Nashville.

- 2024.02: 🎉🎉 Mamba4Rec has received the Best Paper Award at the KDD’24 Resource-efficient Learning for Knowledge Discovery Workshop (RelKD’24).

📝 Publications

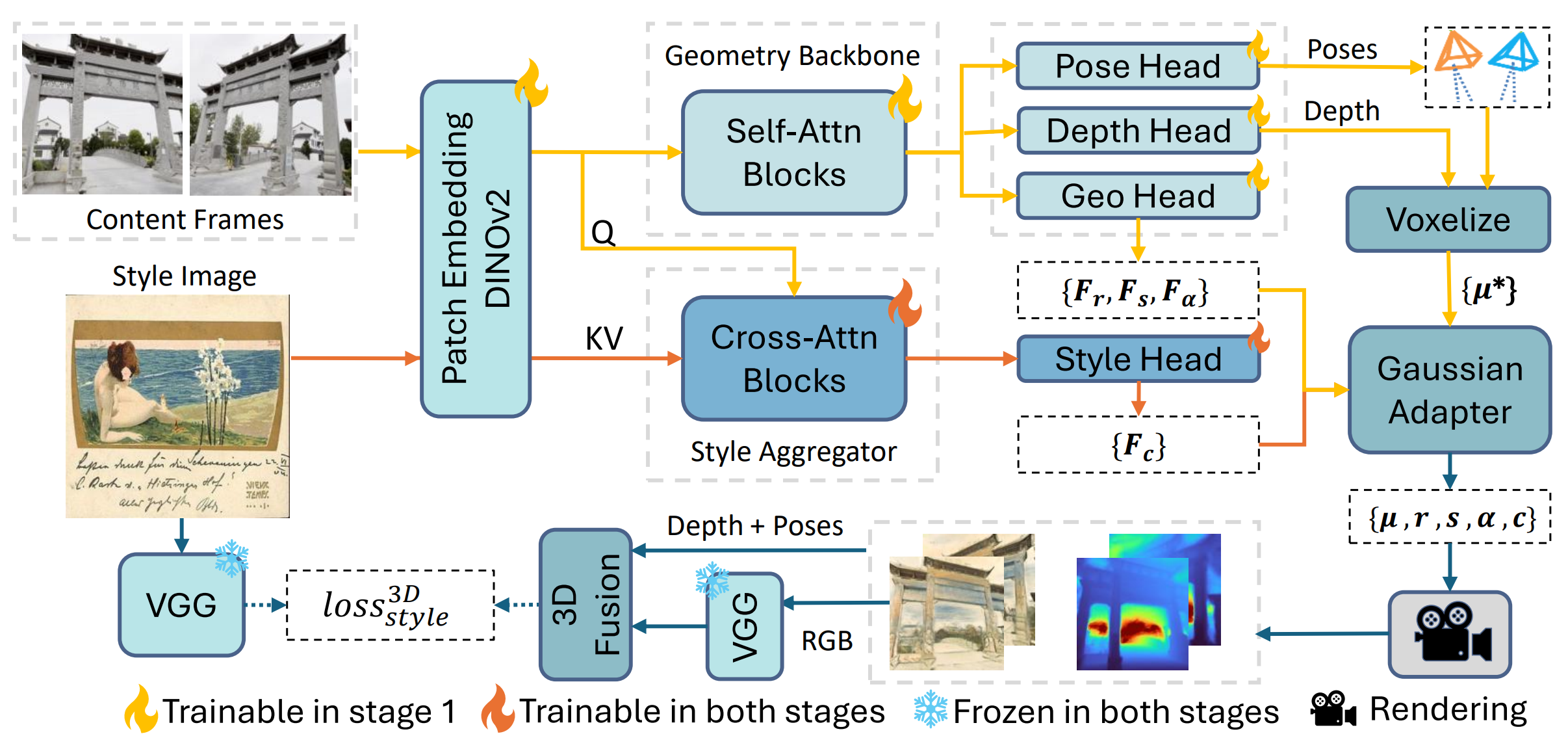

Stylos: Multi-View 3D Stylization with Single-Forward Gaussian Splatting

Hanzhou Liu*, Jia Huang, Mi Lu, Srikanth Saripalli, Peng Jiang* †, ICLR 2026

- Stylos couples VGGT with Gaussian Splatting for cross-view style transfer, introducing a voxel-based style loss to ensure multi-view consistency.

XYScanNet: A State Space Model for Single Image Deblurring

Hanzhou Liu, Chengkai Liu, Jiacong Xu, Peng Jiang, Mi Lu, NTIRE CVPR 2025

- XYScanNet maintains competitive distortion metrics while significantly improving perceptual performance. Experimental results show that XYScanNet improves KID by 17% over the nearest competitor.

Mamba4Rec: Towards Efficient Sequential Recommendation with Selective State Space Models

Chengkai Liu, Jianghao Lin, Jianling Wang, Hanzhou Liu, James Caverlee, RelKD KDD 2024 (Best Paper Award)

Behavior-Dependent Linear Recurrent Units for Efficient Sequential Recommendation

Chengkai Liu, Jianghao Lin, Hanzhou Liu, Jianling Wang, James Caverlee, CIKM 2024

Real-World Image Deblurring via Unsupervised Domain Adaptation

Hanzhou Liu, Binghan Li, Mi Lu, Yucheng Wu, ISVC 2023

📖 Education

- 2021.08 - now, Texas A&M University, PhD in Computer Engineering.

- 2019.08 - 2021.06, Texas A&M University, MS in Computer Engineering.

- 2014.08 - 2018.06, Jilin University, BS in Electrical Engineering.

💬 Invited Talks

- 2024.07, Mamba4Rec, invited talk at Uber.

🧾 Community Services

- 2025, Reviewer, New Trends in Image Restoration and Enhancement (NTIRE) workshop, in conjunction with CVPR 2025.

- 2024, Reviewer, IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).

- 2024, Reviewer, The Conference on Information and Knowledge Management (CIKM).